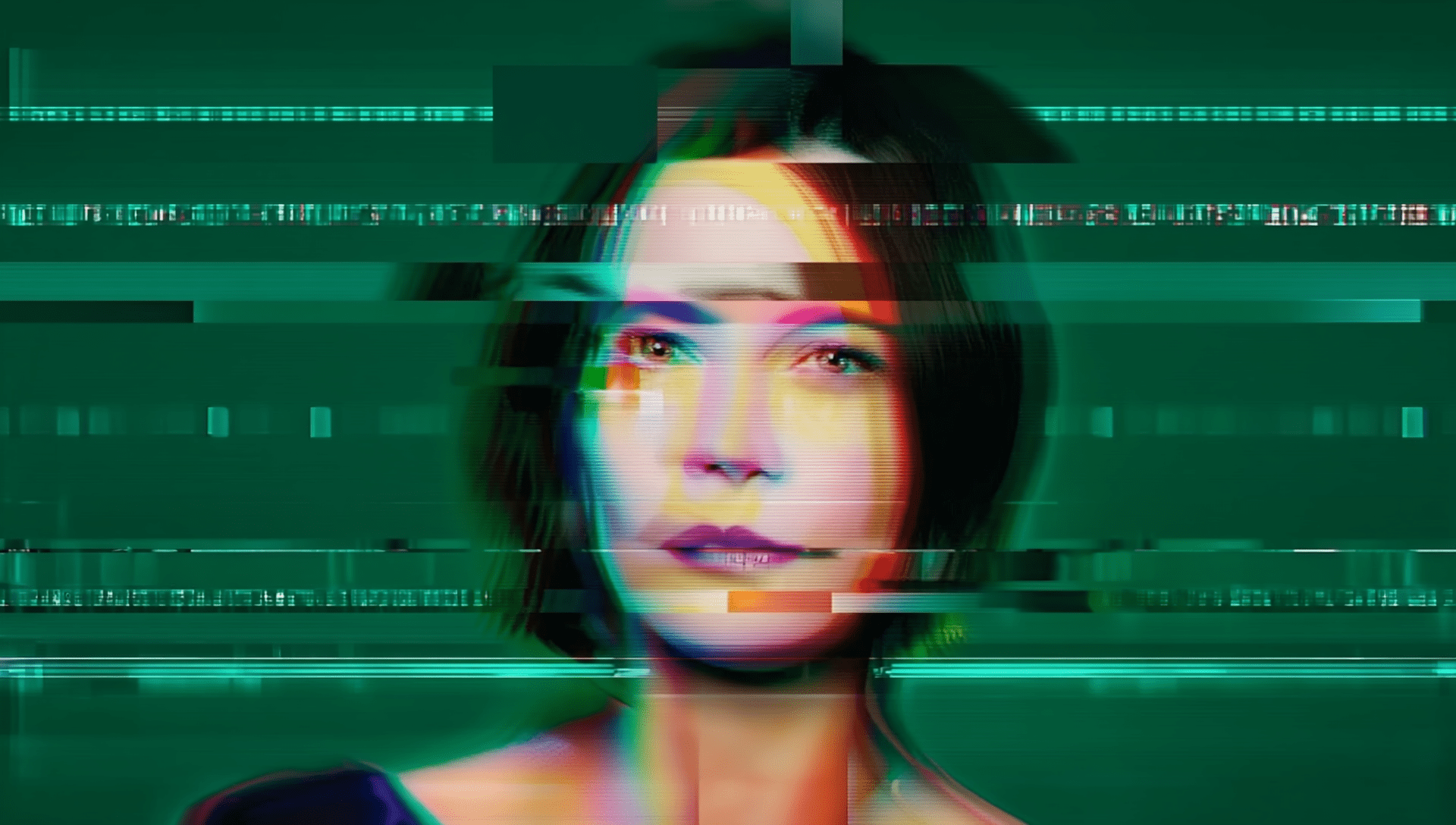

Deepfake Impersonation in Remote Hiring

Deepfake impersonation is reshaping insider threats in remote hiring. Learn how AI-generated applicants are bypassing interviews – and how to stop them.

Remote hiring has transformed how enterprises access talent. It has also created a new vector for insider threats.

As organizations expand their talent pools through virtual hiring, attackers are exploiting the same infrastructure to gain access to corporate systems. Using artificial intelligence, threat actors can now generate highly realistic personas designed to pose as job candidates. These synthetic applicants are not simply falsified resumes or embellished credentials. They are deepfake-driven constructs engineered to pass interviews and embed themselves inside organizations.

This emerging threat represents a shift in how adversaries gain privileged access. Instead of compromising or manipulating existing employees, they are now fabricating entirely new ones.

How Deepfake Impersonation Works

A deepfake impersonation attack typically begins with the theft of real identity data. From there, the attacker uses AI-powered video and voice synthesis tools to create a convincing digital facsimile of a real person. These tools can simulate natural facial expressions, speech, and eye movements in real time.

During a video interview, the synthetic applicant appears authentic. They respond to questions, make eye contact, and display typical conversational behavior. In reality, the face and voice on screen are synthetic: behind the scenes, a threat actor is operating the digital avatar to gain the interviewers' trust.

Once hired, they receive valid credentials, legitimate access to systems, and the ability to operate undetected within the organization. From this position, they can exfiltrate sensitive data, deploy malware, or surveil internal communications for future exploitation.

One of the first widely reported cases of deepfake impersonation occurred in 2024 during a targeted attack on KnowBe4. A North Korean threat actor used a stolen identity and AI-generated video to impersonate a job applicant during remote interviews. The synthetic candidate successfully passed multiple stages of the hiring process and was onboarded as a legitimate employee. Shortly after being hired, the attacker attempted to deploy malware from within the organization – exposing a serious blind spot in the cybersecurity firm’s hiring processes.

Why This Threat Is Different

Historically, adversaries relied on manipulating or compromising existing employees to gain internal access. Deepfake impersonation changes that approach. Attackers no longer need to coerce, bribe, or socially engineer someone on the inside. Instead, they can create entirely synthetic identities, pass as legitimate hires, and operate under authentic credentials.

Remote hiring platforms, originally designed to improve access to talent and streamline recruiting, have become a new surface for social engineering attacks. The onboarding process – once seen as a routine HR function – is now a critical vulnerability in the enterprise.

What makes this threat especially dangerous is its potential for longevity and repeatability. Once a synthetic insider is embedded within an organization, they may remain active for months or even years before detection. They attend meetings, build relationships, and participate in normal workflows – all while advancing an adversary’s goals.

Unlike traditional social engineering attacks, which typically demand sustained manual effort against one target at a time, deepfake-driven impersonation can be partly automated, distributed, and executed at scale. A single attacker could target multiple companies using variations of the same synthetic persona.

This is not a one-time attack. It is a repeatable method for long-term compromise.

The Risk Across the Enterprise

Once hired, a synthetic insider has access to the same tools, systems, and data as any legitimate employee. Their activities blend into normal workflows, making detection extremely difficult. The risk spans business functions and industries.

Key areas of concern include:

- Engineering: Source code theft, backdoor insertion, and disruption of development pipelines

- Finance: Unauthorized access to transactions, payment systems, and financial planning data

- Healthcare: Exposure of patient records, research data, or regulatory filings

- Legal and Compliance: Surveillance of sensitive documents and policy communications

- Executive Operations: Monitoring of strategic planning, board communications, and M&A activity

The more privileged the role, the greater the potential for harm.

Why Detection Is Difficult

Most identity verification methods used in hiring are built to validate documents and confirm background information – not to detect AI-generated video or voice. The hiring process operates under a critical assumption: that the person on the other side of the screen is who they claim to be. Deepfake impersonation exploits this trust.

Even after onboarding, traditional security tools often fall short. SIEMs, endpoint protection platforms, and access control systems are designed to monitor behavior and flag anomalies – not to confirm whether the user is real. A synthetic insider, operating carefully, may not trigger any alerts at all.

The burden then falls on HR and recruiting teams, who are often not trained to detect digital forgery or assess the authenticity of video interviews. Without advanced detection capabilities, organizations may not realize they have been infiltrated until significant damage has occurred.

Defending Against Deepfake Impersonation in Remote Hiring

Defending against this threat requires more than legacy security awareness training or phishing simulations; it calls for layered defenses, and with those in place, even advanced deepfake impersonation becomes manageable. Detection tools such as Reality Defender play a critical role by flagging and blocking many AI-generated threats before they reach the user. But no detection system is perfect, which is why securing the user layer is essential.

Dune Security prepares your workforce to handle what detection technology might miss. By simulating real-world attacks, scoring user risk, and adapting remediation based on that risk, Dune helps organizations stop deepfake-driven social engineering before it takes hold. With extended visibility into overlooked workflows like remote hiring, Dune delivers measurable resilience at the user layer, so that trusted business processes, from interviews to everyday communication, do not become entry points for compromise.

FAQs

A deepfake is synthetic media created with artificial intelligence that manipulates video, audio, or images to convincingly mimic a real person. In cybersecurity, deepfakes are used to impersonate trusted individuals, making fraudulent communications, interviews, or approvals appear authentic.

Deepfakes threaten remote hiring because they allow attackers to pose as job candidates in video interviews. By mimicking someone’s face or voice, criminals can bypass identity checks, gain employment under false pretenses, and exploit access to systems or data once inside the organization.

Deepfake impersonation in hiring can allow attackers to infiltrate an organization under false identities. Once onboarded, they can steal sensitive data, plant malware, or abuse insider access. This not only exposes the enterprise to financial and operational damage but also increases regulatory and reputational risk.

Deepfake risks span both human resources and cybersecurity. HR teams manage the hiring process, while security teams provide the tools and expertise to detect synthetic media and respond to incidents. Collaboration between the two keeps hiring both efficient and secure.

Interviewers should be trained to spot deepfake red flags such as lip-sync issues, unnatural blinking, lighting glitches, or robotic voices. They should also use simple real-time tests like head turns or object interactions. Organizations should back this with clear policies, playbooks, and access to detection tools so suspicious cases are escalated quickly.
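A fixed, predictable script is easier for an impersonator to rehearse or pre-render, so one way to apply real-time tests is to draw them at random during each interview. The sketch below is a hypothetical illustration, not a Dune Security or Reality Defender feature: the challenge list and the `pick_challenges` helper are assumptions, and the code only uses Python's standard `secrets` module to choose prompts and a one-time number that the candidate cannot know in advance.

```python
import secrets

# Hypothetical challenge pool; adapt it to your own interview playbook.
CHALLENGES = [
    "Turn your head slowly to the left, then to the right",
    "Hold a hand in front of your face, then lower it",
    "Pick up a nearby object and hold it next to your face",
    "Stand up and step partly out of frame, then return",
    "Read aloud this number: {code}",
]


def pick_challenges(count: int = 3) -> list[str]:
    """Return `count` distinct challenges in an unpredictable order."""
    if not 0 < count <= len(CHALLENGES):
        raise ValueError("count must be between 1 and the pool size")
    # SystemRandom draws from the OS random source, so it cannot be seeded or replayed.
    rng = secrets.SystemRandom()
    chosen = rng.sample(CHALLENGES, count)
    # Generate a fresh six-digit number for the read-aloud prompt, if selected.
    code = f"{secrets.randbelow(10**6):06d}"
    # str.format ignores unused keyword arguments, so prompts without {code} are unchanged.
    return [prompt.format(code=code) for prompt in chosen]


if __name__ == "__main__":
    for step, prompt in enumerate(pick_challenges(), start=1):
        print(f"{step}. {prompt}")
```

Randomized prompts like these supplement, rather than replace, detection tools and escalation playbooks: an interviewer who sees lag, warping, or a refusal during a challenge has a concrete reason to escalate.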
